The Three Phases of AI-Assisted Coding

Most developers approach AI coding assistants backwards. They open their IDE, type "write me a function that does X," and expect magic. When the AI produces something that doesn't quite fit, they blame the tool. "AI coding is overhyped," they say after a frustrating week of wrestling with generated code that keeps missing the mark.

But here's what I've learned after using AI coding tools daily since 2023: the problem isn't the AI. It's that most people skip straight to implementation without doing the crucial work that makes implementation trivial.

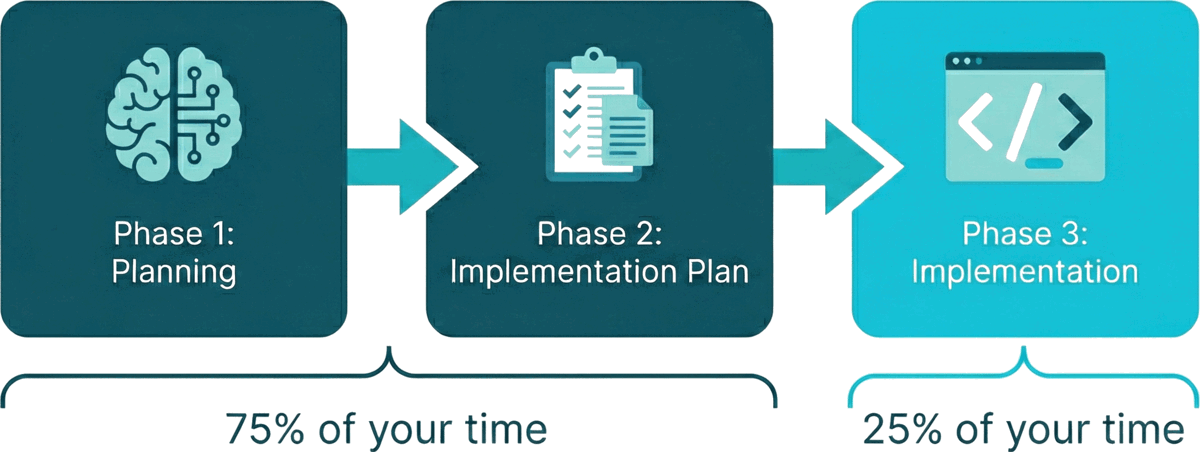

The insight is simple: separate thinking from doing. AI-assisted coding works best when you treat it as three distinct phases, not one. And if you're doing it right, roughly 75% of your time should be spent in the first two phases. The actual coding should be the easy part.

The Three Phases

Phase 1: Interactive Planning

This is where most people skip ahead, and it's exactly where you should slow down.

In the planning phase, you're not asking the AI to write code. You're having a conversation. You're exploring the problem space together, considering different approaches, and making architectural decisions. The AI becomes your thought partner, not your code generator.

I explicitly ask the AI to ask me high-information questions. Instead of the AI just proposing solutions, I have it research the codebase and relevant sources, then ask me questions that will shape the direction. I provide answers, it researches more, asks more questions, and we iterate until we've thoroughly explored the problem.

This back-and-forth is where the real value happens. You're injecting your expertise and project context into the planning process. The AI brings broad knowledge and can spot things you might miss. Together, you arrive at a much better plan than either would alone.

This kind of deep, exploratory work benefits from high-reasoning models. Mistakes at this stage are expensive. If you have to restart mid-implementation because you missed something, or worse, you ship a bug because you didn't think through an edge case, you'll wish you'd spent more time here.

Here's the prompt I use:

Before we start implementing anything, I want you to thoroughly research the codebase, any relevant documentation, and best practices from reliable sources on the web. Then ask me high-information questions to help decide the best approach and how to prioritize the work.

For each question:

1. Ask the question

2. Provide your reasoning on how you would answer it

3. Give your recommendation

Focus on strategic questions, not implementation details. We're planning, not coding yet.

When you have no more high-information questions, tell me and wait for my next instruction.

A note on scope: When I'm iterating rapidly toward an MVP or prototype, I add a line to this prompt telling the AI to keep things simple and focus on high-ROI changes. This keeps the AI grounded when you just need something that works. If you're building enterprise software with long-term maintenance in mind, you might skip this constraint.

The magic is in that last line of the prompt. The AI signals when it's done exploring, and you decide when to move to the next phase. You stay in control of the process.
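If you reuse this prompt across projects, a small helper can switch the MVP constraint on and off while always keeping the stop signal as the final line. Here's a minimal Python sketch. `build_planning_prompt` and the constants are hypothetical names, and the MVP sentence is a paraphrase, since its exact wording isn't given above.

```python
from textwrap import dedent

# Planning prompt from the post, minus its final (stop) line.
BASE_PROMPT = dedent("""\
    Before we start implementing anything, I want you to thoroughly research the codebase, any relevant documentation, and best practices from reliable sources on the web. Then ask me high-information questions to help decide the best approach and how to prioritize the work.

    For each question:
    1. Ask the question
    2. Provide your reasoning on how you would answer it
    3. Give your recommendation

    Focus on strategic questions, not implementation details. We're planning, not coding yet.""")

# Hypothetical wording: the post describes this MVP constraint but doesn't quote it.
MVP_SCOPE_LINE = "Keep things simple and focus on high-ROI changes; this is an MVP/prototype."

# The stop signal that hands control back to you when exploration is done.
STOP_LINE = ("When you have no more high-information questions, "
             "tell me and wait for my next instruction.")


def build_planning_prompt(mvp: bool = False) -> str:
    """Assemble the planning prompt, optionally adding the MVP scope constraint."""
    parts = [BASE_PROMPT]
    if mvp:
        parts.append(MVP_SCOPE_LINE)
    parts.append(STOP_LINE)  # always last, so the AI ends by waiting for you
    return "\n\n".join(parts)
```

Keeping `STOP_LINE` out of the base text means no optional addition can ever land after it.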

Phase 2: Implementation Plan Creation

Once you've explored the problem and made your key decisions, it's time to create a detailed implementation plan. This is still not coding. This is creating the roadmap that makes coding straightforward.

Write the implementation plan assuming the agent that implements it will have zero context from your planning conversation. This might seem redundant, but it's essential. You're going to hand this plan to a fresh AI session (or a future version of yourself), and it needs to stand alone.

I have the AI write the plan to a markdown file in my repo with:

- The current state of the relevant code and any important context upfront

- Step-by-step tasks that can be checked off

- Enough detail that each step is unambiguous

- Checkpoints after major features where you pause to test, lint, and git commit your changes before moving on
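A checkpoint can also be scripted so that nothing gets committed unless the checks pass. Here's a minimal Python sketch, assuming a project that uses pytest for tests and ruff for linting (both stand-ins; swap in your own commands). `run_checkpoint` is a hypothetical helper, not part of any tool.

```python
import subprocess
from typing import Callable, List, Sequence

# Stand-in commands (assumptions): replace with your project's own test and lint steps.
DEFAULT_CHECKS: List[List[str]] = [
    ["pytest", "-q"],
    ["ruff", "check", "."],
]

Runner = Callable[[List[str]], subprocess.CompletedProcess]


def run_checkpoint(message: str,
                   checks: Sequence[Sequence[str]] = DEFAULT_CHECKS,
                   run: Runner = subprocess.run) -> bool:
    """Run each check in order and commit only if every one passes."""
    for cmd in checks:
        if run(list(cmd)).returncode != 0:
            print(f"Checkpoint failed at: {' '.join(cmd)}")
            return False  # stop before committing anything
    # All checks passed: stage the work and record the checkpoint.
    for cmd in (["git", "add", "-A"], ["git", "commit", "-m", message]):
        if run(cmd).returncode != 0:
            print(f"Git step failed: {' '.join(cmd)}")
            return False
    return True
```

With the default `subprocess.run`, the commands execute in the current working directory and the function stops at the first failure. Passing `run` as a parameter keeps the helper testable with a fake runner.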

Like Phase 1, this still benefits from high-reasoning models. You're still thinking, not doing.

Here's the prompt I use:

The plan should:

- Start with the current state of the relevant code and any important context

- Break the work into clear, sequential steps

- Include enough detail that someone with no context from this conversation could execute it

- Add checkpoints after major features where we should pause to test, verify everything works, and git commit before continuing

- Use checkbox format so progress can be tracked

Be thorough but don't write the actual code. The goal is a roadmap, not implementation.

The checkbox format matters. As you implement, you (or the AI) check things off and can add notes. It becomes a living document that tracks progress.
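Because the plan uses standard markdown task-list syntax, its progress is easy to read mechanically too. The sketch below is a minimal Python illustration, assuming `- [ ]` / `- [x]` checkboxes. `parse_tasks`, `progress`, and the sample plan are hypothetical.

```python
import re
from dataclasses import dataclass

# Matches task-list items such as "- [ ] Add index" or "- [x] Add index".
TASK_RE = re.compile(r"^\s*[-*]\s+\[( |x|X)\]\s+(.*)$")


@dataclass
class Task:
    done: bool
    text: str


def parse_tasks(plan_md: str) -> list[Task]:
    """Collect every checkbox item in the plan, in order."""
    tasks = []
    for line in plan_md.splitlines():
        match = TASK_RE.match(line)
        if match:
            tasks.append(Task(done=match.group(1).lower() == "x",
                              text=match.group(2).strip()))
    return tasks


def progress(plan_md: str) -> tuple[int, int]:
    """Return (completed, total) checkbox counts."""
    tasks = parse_tasks(plan_md)
    return sum(t.done for t in tasks), len(tasks)


# Hypothetical plan file contents, for illustration only.
SAMPLE_PLAN = """\
## Current state
The importer loads CSVs row by row.

## Steps
- [x] Step 1: Add a batch_size option to the importer
- [x] Step 2: Read rows in batches
- [ ] CHECKPOINT: run tests, lint, and git commit
- [ ] Step 3: Update the docs
  - Note: keep the old default for backward compatibility
"""
```

For the sample plan, `progress(SAMPLE_PLAN)` returns `(2, 4)`. The indented note line is ignored because it has no checkbox, so notes can be added freely without skewing the count.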

Phase 3: Implementation

Now, finally, you write code. But if you've done Phases 1 and 2 well, this phase should feel almost effortless. The hard decisions are made. The plan is clear. You're just executing.

Start a fresh chat session. Point the AI at your implementation plan file and nothing else, then tell it to follow the plan. Something like:

Before each change, study the relevant code thoroughly. Make minimal, precise changes. After completing each step, mark it done in the plan.

Start with step 1.
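The "mark it done" bookkeeping is simple enough to script if you ever want to check where a plan stands outside the chat. Here's a minimal Python sketch, assuming the same checkbox convention and `\n` line endings. `next_step` and `mark_next_done` are hypothetical helpers.

```python
import re
from typing import Optional

# Assumes markdown task-list items ("- [ ] ..." open, "- [x] ..." done) and "\n" line endings.
OPEN_BOX = re.compile(r"^(\s*[-*]\s+)\[ \](\s+.*)$")


def next_step(plan_md: str) -> Optional[str]:
    """Return the first unchecked item, i.e. where a fresh session should resume."""
    for line in plan_md.split("\n"):
        match = OPEN_BOX.match(line)
        if match:
            return match.group(2).strip()
    return None  # everything is checked off


def mark_next_done(plan_md: str, note: str = "") -> str:
    """Check off the first unchecked item, optionally appending a short note."""
    lines = plan_md.split("\n")
    for i, line in enumerate(lines):
        match = OPEN_BOX.match(line)
        if match:
            suffix = f" (note: {note})" if note else ""
            lines[i] = f"{match.group(1)}[x]{match.group(2)}{suffix}"
            break
    return "\n".join(lines)
```

Each call to `mark_next_done` flips exactly one box, so repeated calls walk the plan in order, starting from step 1.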

Because the plan is comprehensive and context-free, the AI doesn't need to understand the full history of your planning discussions. It just needs to follow clear instructions. And when everything clicks, you can sit back and watch as the AI works through the plan, checking off steps as it goes. Before you know it, you're done. That moment never stops feeling a little bit magical.

Since the thinking is already done, this phase works fine with lighter, faster models. You're optimizing for speed and cost now, not deep reasoning.

The 75/25 Rule

If you're spending most of your time in Phase 3, you're doing it wrong.

Phases 1 and 2 (planning and plan creation) should take roughly 75% of your time on a feature. Phase 3 (implementation) should be about 25%. When implementation feels hard or the AI keeps producing code that doesn't fit, that's a signal your plan wasn't detailed enough. Go back and improve it.
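As a quick worked example of the ratio, here's a small Python sketch. The function names are hypothetical, and the 10-point tolerance in `check_ratio` is an arbitrary assumption, not part of the rule.

```python
def planning_share(planning_hours: float, plan_writing_hours: float,
                   implementation_hours: float) -> float:
    """Fraction of total time spent in Phases 1 and 2."""
    total = planning_hours + plan_writing_hours + implementation_hours
    if total <= 0:
        raise ValueError("total time must be positive")
    return (planning_hours + plan_writing_hours) / total


def check_ratio(share: float, target: float = 0.75, tolerance: float = 0.10) -> str:
    """Flag features where implementation ate most of the time (tolerance is an assumption)."""
    if share < target - tolerance:
        return "implementation-heavy: revisit the plan"
    return "on track"
```

Three hours of planning, three of plan writing, and two of implementation gives 6/8 = 0.75, right on target. One, one, and six gives 2/8 = 0.25, which is the signal to go back and improve the plan.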

This feels counterintuitive. We want to see code happening. Planning feels like we're not making progress. But the time you invest in planning pays off many times over in smoother implementation and fewer rewrites.

The Takeaway

AI coding assistants are powerful, but they're not magic code generators. They're thought partners that become code generators. The developers who get the most value from these tools are the ones who lean into the partnership, who use the AI to think through problems before asking it to write solutions.

Separate thinking from doing. Plan before you implement. And when implementation feels easy, you'll know you did the planning right.

I teach workshops on AI-assisted development techniques, helping teams build effective workflows with these tools. If you're interested in leveling up how your organization uses AI for coding, let's talk.

Dr. Randal S. Olson

AI Researcher & Builder · Co-Founder & CTO at Goodeye Labs

I turn ambitious AI ideas into business wins, bridging the gap between technical promise and real-world impact.